(NOTE: Check out the other posts in The Secrets of Nim series.)

Did you think I had run out of versions of Nim yet? Today we look at Nim played with dice.

In versions of Nim with dice, the dice aren't rolled. Instead, each player deliberately chooses the number for their own turn. Also, a running total is kept: it starts with the first number chosen by the first player, and the number chosen on each later turn is added to it.

For example, if the first player starts with a 3, then the running total is 3. If the second player were to then choose a 6, the running total would now be 9, because 3 (first turn) + 6 (second turn) = 9. If the first player now chooses a 1, the running total would now be 10, and so on.
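If you like to see things as arithmetic, the running total is just a cumulative sum. Here's a tiny Python sketch of the example above, where `choices` is simply the list of numbers picked on consecutive turns:

```python
from itertools import accumulate

# Numbers chosen on consecutive turns, alternating between the two players.
choices = [3, 6, 1]

# The running total after each turn is the cumulative sum of the choices so far.
running_totals = list(accumulate(choices))
print(running_totals)  # [3, 9, 10]
```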

Let's start with the simplest of the dice Nim games, which we'll simply call 50. The object, naturally, is to be the first person to make the running total reach 50. If you think of this as a more traditional Nim game with objects like coins or matches, it's like you're allowed to put anywhere from 1 to 6 objects on the table on each turn, and the winner is the person who puts the 50th object down on the table (only this way, the players don't have to keep counting the objects on the table).

So, this would be a standard Nim game, instead of a Misère Nim game, as discussed in part 1 of this series. But what is the strategy here?

If you either try the game out, or understand the principles in the part 1 post, or you simply cheated and ran right to the Single-Pile Nim tab of the Nim Strategy Calculator, it's not hard to see that you should play to get to one of the following key numbers as soon as possible, and then play to get to the rest: 1, 8, 15, 22, 29, 36, 43, and 50.

Why these numbers? First, they're all 7 apart, which means one player can't move from one key number to the next in a single move. To go from one key number to the next takes at least 2 moves, which means the player going to these key numbers can lock the other player out of these key numbers.

Note that all the key numbers are also 1 greater than a multiple of 7 (36 is 1 greater than 35, which is 7 times 5, etc.). Why is this? It's because the winning total, 50, is 1 greater than a multiple of 7. If the desired total were 51, all your key numbers would be 2 above multiples of 7 (2, 9, 16, 23, 30, 37, 44, and 51).
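The same rule works for any target and any largest allowed number: the key numbers are spaced one more than the largest move apart, and they leave the same remainder as the target. Here's a minimal Python sketch of that rule, assuming nobody is allowed to push the total past the target; `key_numbers` and `winning_move` are just illustrative names.

```python
from typing import Optional


def key_numbers(target: int, max_move: int) -> list[int]:
    """Totals you want to land on when each turn adds 1..max_move."""
    step = max_move + 1          # a gap the opponent can never cross in one turn
    first = target % step        # key numbers share the target's remainder
    if first == 0:
        first = step             # a total of 0 can't be "landed on" by a move
    return list(range(first, target + 1, step))


def winning_move(total: int, target: int, max_move: int) -> Optional[int]:
    """Amount to add to reach the next key number, or None if you're already stuck."""
    move = (target - total) % (max_move + 1)
    return move if move != 0 else None


print(key_numbers(50, 6))       # [1, 8, 15, 22, 29, 36, 43, 50]
print(key_numbers(51, 6))       # [2, 9, 16, 23, 30, 37, 44, 51]
print(winning_move(3, 50, 6))   # 5, which takes the total from 3 to the key number 8
```

When `winning_move` returns `None`, your opponent has already landed on a key number, and the best you can do is make a small move and hope they slip.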

To see how this changes for a different game, check out this 100 version on Scam School. You can choose numbers from 1 to 10, so how far apart are the key numbers going to be? Correct: they'll be 11 numbers apart. Since 100 is 1 above a multiple of 11, all the key numbers will be 1 above a multiple of 11: 1, 12, 23, 34, 45, 56, 67, 78, 89, and 100. If you like, you can think of this as the same as our first game, but with 10-sided dice (Didn't need to click to see what a 10-sided die looks like? HA! RPG Geek test!).
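You don't have to take the spacing of the key numbers on faith. This minimal Python sketch works backward from the target, assuming (as in the 50 and 100 games) that nobody may go past the target; `losing_totals` is a name made up for this example. It lists every total that leaves the player about to move with no way to win, which is exactly the set of totals you want to hand your opponent.

```python
def losing_totals(target: int, max_move: int) -> list[int]:
    # mover_wins[t] is True when the player about to move from total t can force a win.
    # At t == target the game is already over, so the player "to move" has lost.
    mover_wins = [False] * (target + 1)
    for t in range(target - 1, -1, -1):
        # A total is a win for the mover if some legal move (never past the target)
        # lands on a total that is a loss for the opponent.
        mover_wins[t] = any(
            not mover_wins[t + f]
            for f in range(1, max_move + 1)
            if t + f <= target
        )
    # The totals you want to leave for your opponent are exactly the losing ones.
    return [t for t in range(1, target + 1) if not mover_wins[t]]


print(losing_totals(100, 10))  # [1, 12, 23, 34, 45, 56, 67, 78, 89, 100]
print(losing_totals(50, 6))    # [1, 8, 15, 22, 29, 36, 43, 50]
```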

Getting back to 6-sided dice, let's throw a wrench into the works. This time, the goal is to bring the running total to exactly 31, or to be the first person to force the other player to go OVER 31. However, in this version, you can't just choose any number. The first player rolls the die (or simply chooses the starting number), but after that, each player can only rotate the die a quarter turn (in other words, 90 degrees in any direction) and add the number that ends up on top! What's the best strategy now?

This presents 2 challenges. With a 1 on top, you can't choose a 1 for yourself. Also, since opposite sides of a die add up to 7, there's a 6 on the bottom, and you can't choose that, either. On any given turn, you can only choose from 4 of the 6 numbers, and which 4 are available changes with the number already on top!
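Here's that restriction as a short Python sketch, assuming a standard die where opposite faces add up to 7 (`legal_faces` is just an illustrative name). A quarter turn can bring any of the 4 side faces to the top, so the only numbers ruled out are the one on top and the one on the bottom.

```python
def legal_faces(top: int) -> set[int]:
    """Faces you can turn up with one quarter turn from the current top face."""
    if top not in range(1, 7):
        raise ValueError("a die face must be between 1 and 6")
    # The top face stays out of reach, and so does the bottom face, 7 - top.
    return set(range(1, 7)) - {top, 7 - top}


print(sorted(legal_faces(1)))  # [2, 3, 4, 5]
print(sorted(legal_faces(3)))  # [1, 2, 5, 6]
```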

To examine the strategy more closely, you'll need to be clear about the concept of digital roots. Here's a video that explains them clearly:

Surprisingly, our 31 dice game is based entirely on digital roots! Since the digital root of 31 is 4 (31 → 3 + 1 = 4), your key numbers for this game are 4, 13, 22, and 31.
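If you'd like to play with digital roots yourself, here's a minimal Python sketch (`digital_root` is just an illustrative name) that keeps adding up the digits until one digit is left, and then picks out every total up to 31 that shares 31's digital root. Numbers with the same digital root are always a multiple of 9 apart, which is why the key numbers come out 9 apart.

```python
def digital_root(n: int) -> int:
    """Repeatedly add up the decimal digits of a positive integer until one digit remains."""
    while n >= 10:
        n = sum(int(d) for d in str(n))
    return n


print(digital_root(31))  # 4

# Every total from 1 to 31 with the same digital root as 31.
primary_keys = [t for t in range(1, 32) if digital_root(t) == digital_root(31)]
print(primary_keys)  # [4, 13, 22, 31]
```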

At this point, you're probably a little confused. All those numbers are 9 apart, and you can only choose numbers in the range of 1 to 6. Worse yet, you can only choose from 4 numbers in that range, and which 4 you can choose keeps changing!

To compensate for this, you're going to need to deal with a secondary set of key numbers. This is something new and unusual for Nim, but it's necessary here.

What are these secondary key numbers? They're 1, 5, and 9, along with every number that has one of those as its digital root: besides 1, 5, and 9, that means 10, 14, 18, 19, 23, and so on. There's a caveat, however. You should only move the total to one of these numbers if you can get there by turning up a 3 or a 4.

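Why is that? If you get to, say, 9 by turning up a 4, the 3 is on the bottom, so you've taken both the 4 AND the 3 out of play for the next person. Even better, whichever quarter turn the other player makes, the 4 and the 3 both end up on the sides of the die, so they have to put the 3 and the 4 back into play for you.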
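You can check this "take away, then give back" effect with a few lines of Python. This sketch assumes a standard die (opposite faces add up to 7), and `legal_faces` is an illustrative helper, not anything official.

```python
def legal_faces(top: int) -> set[int]:
    # One quarter turn reaches any face except the top and the bottom (7 - top).
    return set(range(1, 7)) - {top, 7 - top}


# You turn up a 4, so the 3 is on the bottom: your opponent can use neither.
opponent_options = legal_faces(4)
print(sorted(opponent_options))  # [1, 2, 5, 6]

# Whatever your opponent turns up next, the 4 and the 3 are both on the sides again.
for new_top in sorted(opponent_options):
    print(new_top, {3, 4} <= legal_faces(new_top))  # True every time
```

The same thing happens if you turn up a 3 instead, since that just swaps which of the two is on the bottom.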

Even this won't cover every possible move, so there's one other secondary key you need to remember. As a last resort when no other moves are possible, get to a number with a digital root of 8, but only if you can do so with a 2 or a 5.
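To see every secondary key number at once, here's a short Python sketch. It uses the shortcut that, for a positive whole number, the digital root is the remainder after dividing by 9, with 9 standing in for 0; `digital_root` is an illustrative name.

```python
def digital_root(n: int) -> int:
    # For a positive integer, the digital root is n mod 9, with 9 in place of 0.
    return 1 + (n - 1) % 9


totals = range(1, 32)  # every running total from 1 to 31

secondary = [t for t in totals if digital_root(t) in (1, 5, 9)]  # reach these with a 3 or a 4
last_resort = [t for t in totals if digital_root(t) == 8]        # reach these with a 2 or a 5

print(secondary)    # [1, 5, 9, 10, 14, 18, 19, 23, 27, 28]
print(last_resort)  # [8, 17, 26]
```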

In short, here's the winning strategy for 31:

- Ask yourself: “Can I rotate the die so as to get to a running total with a digital root of 4 (4, 13, 22, or 31)?” If so, do it! If not...

- ...Ask yourself, “Could I rotate the die to a 3 or a 4, so as to get to a running total with a digital root of 1, 5, or 9 (1, 5, 9, 10, 14, 18, 19, 23, 27, or 28)?” If so, do it! If not...

- ...rotate the die to a 2 or a 5, so as to obtain a running total with a digital root of 8 (8, 17, or 26). Assuming the other player hasn't purposely or accidentally made a good move, this one will still be possible.
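Here's the three-step checklist above written out as a Python sketch. The names `suggested_face`, `legal_faces`, and `digital_root` are invented for this illustration. It assumes a standard die (opposite faces add up to 7), never suggests a turn that would push the total past 31, and returns `None` when none of the three rules applies (for example, when the other player has made a good move). When a rule can be satisfied in more than one way, it just takes the smallest face.

```python
from typing import Optional

TARGET = 31


def digital_root(n: int) -> int:
    # For positive n, the digital root is n mod 9, with 9 in place of 0.
    return 1 + (n - 1) % 9


def legal_faces(top: int) -> list[int]:
    # A quarter turn can bring up any face except the top and its opposite (7 - top).
    return [f for f in range(1, 7) if f not in (top, 7 - top)]


def suggested_face(total: int, top: int) -> Optional[int]:
    """Apply the three rules in order; return the face to turn up, or None."""
    options = [f for f in legal_faces(top) if total + f <= TARGET]
    # Rule 1: reach a total whose digital root is 4 (4, 13, 22, or 31).
    for f in options:
        if digital_root(total + f) == 4:
            return f
    # Rule 2: with a 3 or a 4, reach a total whose digital root is 1, 5, or 9.
    for f in options:
        if f in (3, 4) and digital_root(total + f) in (1, 5, 9):
            return f
    # Rule 3 (last resort): with a 2 or a 5, reach a total whose digital root is 8.
    for f in options:
        if f in (2, 5) and digital_root(total + f) == 8:
            return f
    return None  # none of the rules applies from this position


print(suggested_face(9, 1))  # 4 (9 + 4 = 13, a primary key number)
print(suggested_face(5, 2))  # 4 (5 + 4 = 9, a secondary key reached with a 4)
print(suggested_face(6, 3))  # 2 (6 + 2 = 8, the last-resort rule)
```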

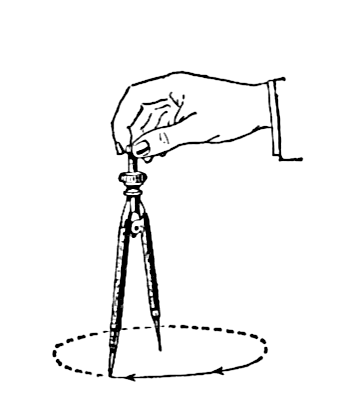
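If you'd rather test the strategy than memorize it, the 31 game is small enough to solve by brute force. This Python sketch assumes the rules as described here: a standard die, the first player picks the opening face outright, reaching exactly 31 wins, and a player whose every quarter turn would push the total past 31 loses. The names `mover_wins` and `winning_faces` are hypothetical; you can compare `winning_faces` against the checklist above for any position you like.

```python
from functools import lru_cache

TARGET = 31  # reach exactly 31 to win; pushing the total past 31 loses


def legal_faces(top: int) -> list[int]:
    # One quarter turn reaches any face except the top and the bottom (7 - top).
    return [f for f in range(1, 7) if f not in (top, 7 - top)]


@lru_cache(maxsize=None)
def mover_wins(total: int, top: int) -> bool:
    """True if the player about to turn the die can force a win."""
    for f in legal_faces(top):
        new_total = total + f
        if new_total == TARGET:
            return True  # hitting 31 exactly wins on the spot
        if new_total < TARGET and not mover_wins(new_total, f):
            return True  # this turn leaves the opponent in a lost position
    return False  # every turn overshoots 31 or hands the opponent a win


def winning_faces(total: int, top: int) -> list[int]:
    """Every face that either hits 31 or leaves the opponent in a lost position."""
    return [
        f
        for f in legal_faces(top)
        if total + f == TARGET
        or (total + f < TARGET and not mover_wins(total + f, f))
    ]


# An opening face is good when the second player, facing that total with
# that face on top, cannot force a win.
good_openings = [f for f in range(1, 7) if not mover_wins(f, f)]
print(good_openings)
print(winning_faces(27, 1))  # [4]
```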
